Augmenting Proto AI with Generative AI

How to augment Proto AI with ChatGPT generative features

Before starting, make sure you have an OpenAI account and your OpenAI API key available.

With all the current buzz around generative AI technologies such as OpenAI's ChatGPT, you may be curious about ways to leverage such technology to augment the capabilities of Proto's natural language processing engine, Hermes AI™.

And now you can! This doc shows how to do so, along with best practices for optimal, business-focused generative query resolutions.

Background

First, let's get some clarity on what generative AI is all about, its purpose, and what it may mean for CX automation.

Generative AI provides a clever way to generate useful content based on specific inputs – such content could be in various formats such as images, text, audio, and more.

Proto's Hermes AI™ works by utilising Natural Language Processing (NLP) to understand user queries (intents) and respond accordingly. To do this, intent phrases and their respective responses must be created in the platform for each user query, at which point the system tries to match the user's query to the most relevant pre-existing intent.

When the user query doesn't match a pre-existing intent, the system responds with a default fallback (known as the "Filler block" in the Proto platform), leading to chatbot responses like "Sorry, I don't quite understand your query, please try rephrasing it."

This is where ChatGPT and its generative AI capabilities come in: we can now use ChatGPT to handle cases where Proto's Hermes AI may fail (for example, when a user asks a question with extremely long text content).
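To make the fallback idea concrete, here is a minimal sketch of "match an intent, otherwise hand over to a generative fallback". It is an illustration only: the intents, the `FALLBACK_THRESHOLD` value, and the simple string-similarity matching are all assumptions made for this example, and do not describe how Hermes AI™ works internally.

```python
from difflib import SequenceMatcher

# Hypothetical intents: each maps example phrases to a canned response.
INTENTS = {
    "opening_hours": {
        "phrases": ["what are your opening hours", "when are you open"],
        "response": "We are open 9am to 5pm, Monday to Friday.",
    },
    "pricing": {
        "phrases": ["how much does it cost", "what is your pricing"],
        "response": "Pricing details are available on request.",
    },
}

FALLBACK_THRESHOLD = 0.6  # assumed cut-off for this sketch, not a Proto setting


def best_intent(query):
    """Return (intent_name, score) for the closest example phrase."""
    best_name, best_score = None, 0.0
    for name, intent in INTENTS.items():
        for phrase in intent["phrases"]:
            score = SequenceMatcher(None, query.lower(), phrase).ratio()
            if score > best_score:
                best_name, best_score = name, score
    return best_name, best_score


def respond(query, generative_fallback):
    """Answer from a matched intent, else pass the query to the fallback."""
    name, score = best_intent(query)
    if name is not None and score >= FALLBACK_THRESHOLD:
        return INTENTS[name]["response"]
    return generative_fallback(query)
```

Here `generative_fallback` stands in for whatever calls GPT; in the Proto setup, that role is played by the bot blocks placed in the FILLER block.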

Prerequisite

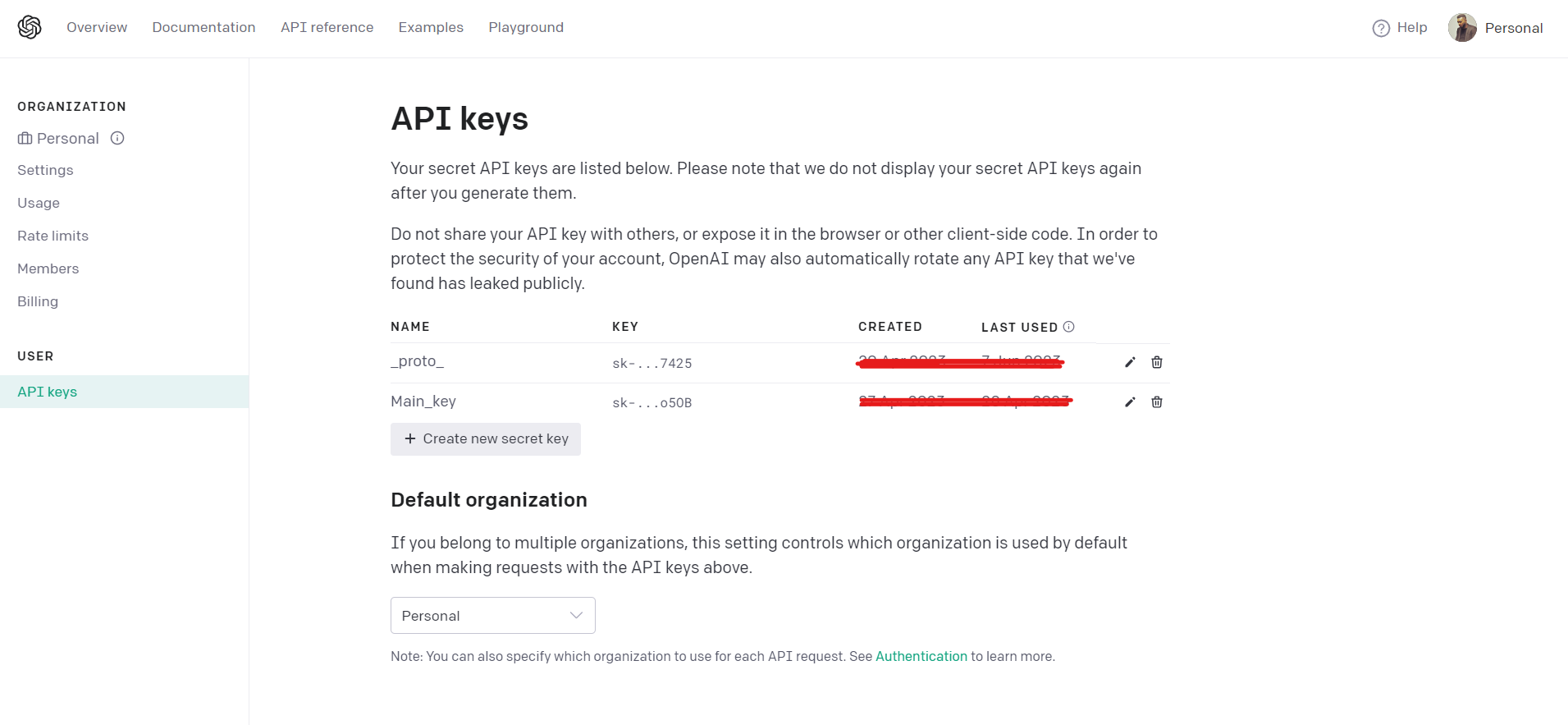

To get started, you'll need to have an OpenAI API key.

You can get this by signing up for an OpenAI account. If you don't have one, you can sign up here: LINK HERE

Once signed in to your OpenAI dashboard, simply follow these steps to get your API key:

- Click on your profile avatar on the top right

- Select view API keys from the drop-down menu

- Click Create new secret key

- Give your key a name, submit it, and copy the token string generated for you. You will not be able to see this token string again, so be sure to copy it and save it somewhere safe immediately. Note that you will still be able to create new secret keys later if needed.
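If you also want to try the key outside Proto (for example, in a quick local script), one common approach is to keep it in an environment variable rather than pasting it into code. The sketch below assumes a variable named `OPENAI_API_KEY`; the helper names are hypothetical and are not part of Proto or OpenAI tooling.

```python
import os


def load_openai_key(env_var="OPENAI_API_KEY"):
    """Read the secret key from an environment variable and check it is set."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set {env_var} to your OpenAI secret key first.")
    return key


def mask_key(key, visible=4):
    """Return a display-safe form of the key, showing only the last characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

Masking the key before printing or logging it helps avoid leaking the full token string by accident.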

Enabling GPT for default response (filler block)

Please note that Proto can set up this GPT feature directly for its clients, saving you the time of following the steps below. To request this, please email "[email protected]" with the subject line "Activate GPT".

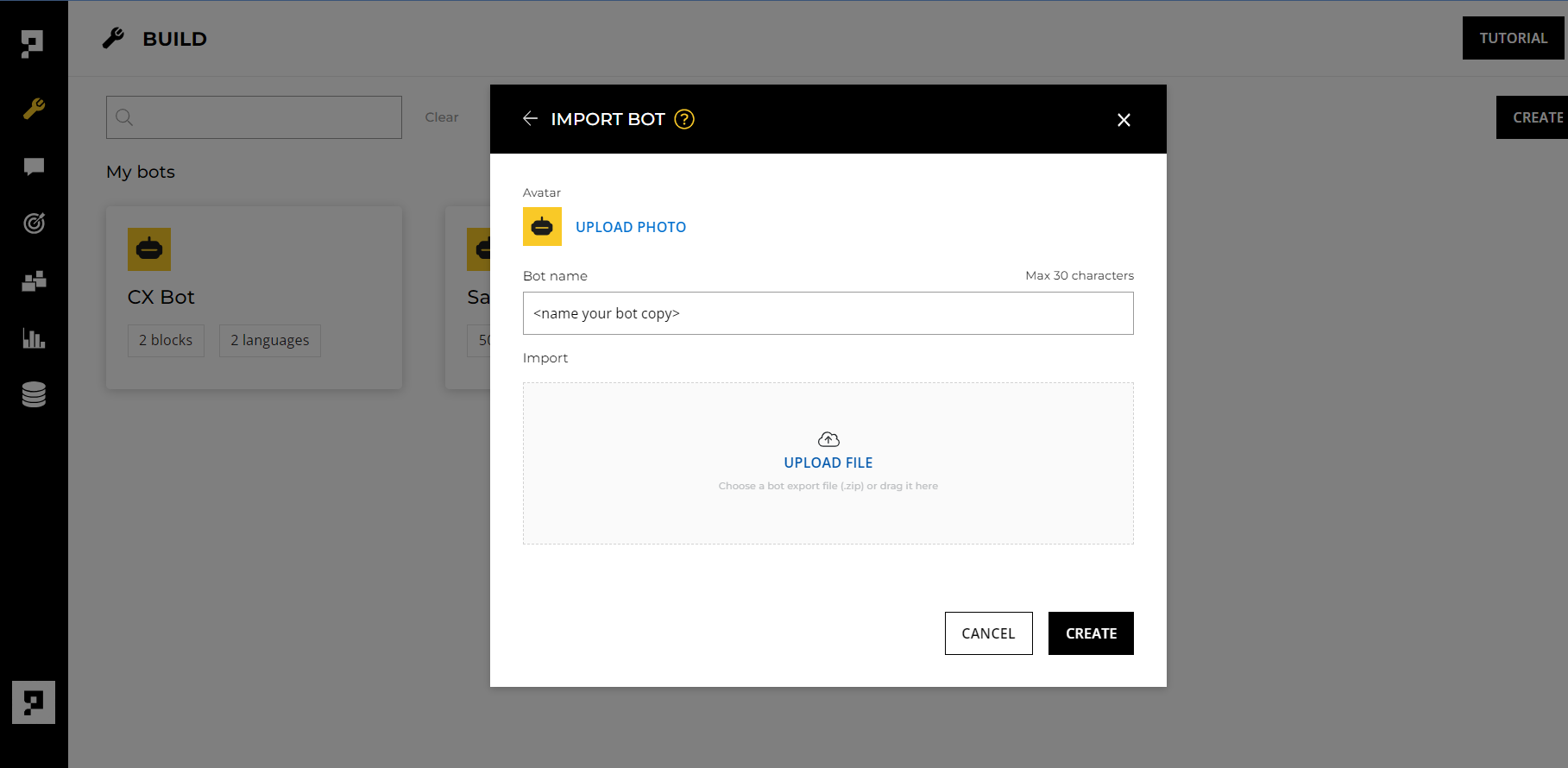

To augment your chatbot with ChatGPT, you'll need to clone a set of bot blocks into your existing Proto chatbot in BUILD. When you do, you'll need to make some changes to the cloned bot content.

To get started, follow the link here to download a copy of the GPT bot structure [ZIP File]. Upload and replicate those bot blocks in your Proto chatbot if you already have one. Then, you'll be able to edit the content of the bot structure.

To clone the above bot structure [ZIP file linked above] into your Proto workspace:

- Download the ZIP file to your computer

- In the BUILD tab, select CREATE BOT > IMPORT BOT > Name your bot clone > Upload the ZIP file > CREATE

- After cloning, you can then replicate the same bot structure in your bot's pre-existing FILLER block

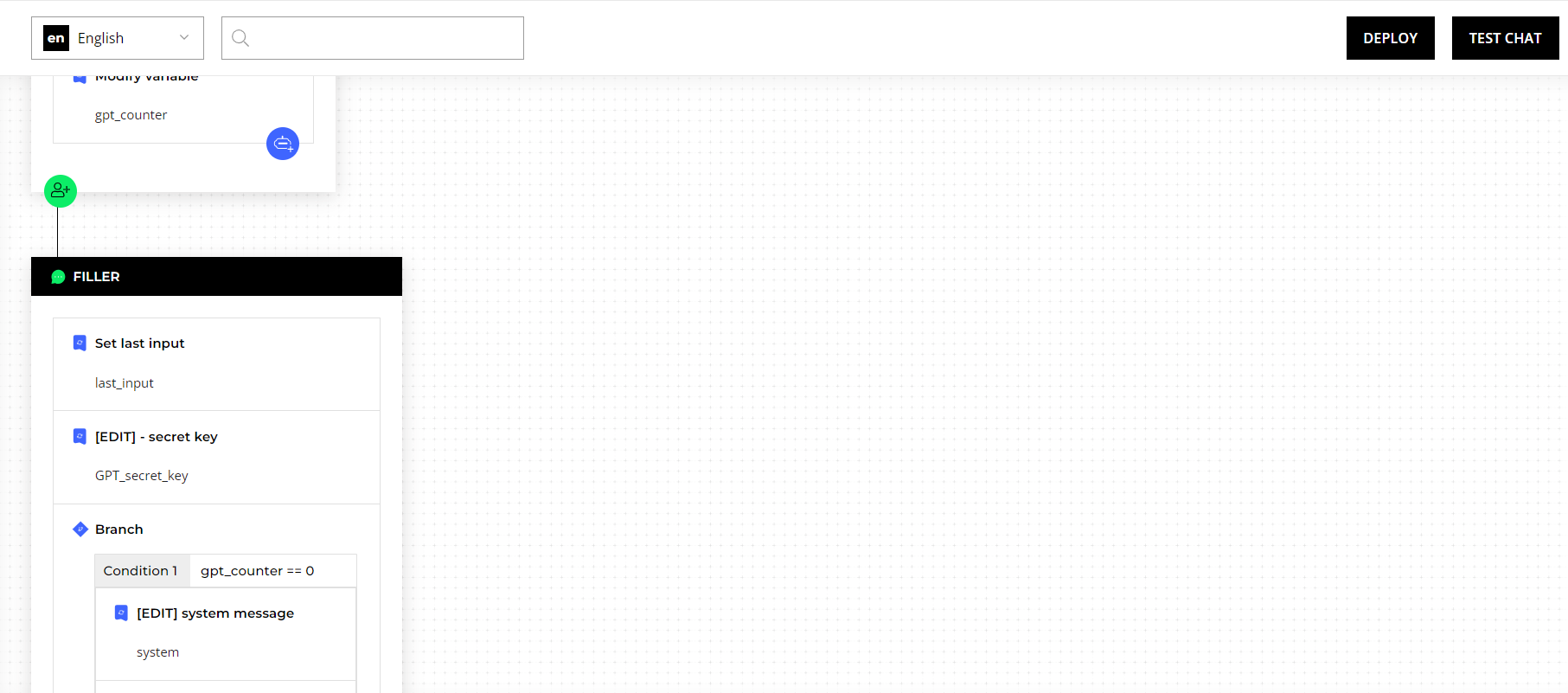

After the bot blocks are configured in your chatbot build, you'll need to add some custom information to the bot before going live, namely:

- Your OpenAI secret key: select and edit the "[EDIT] - secret key" block, and replace its content with your own OpenAI secret key from the previous steps.

- Your business info: sets of instructions and a guide for GPT to follow whilst answering users' queries.

Since you'll mostly need GPT to ONLY answer queries related to your business, you should only change the content that is business-based (currently set to Proto's business info), and leave the instruction-based text content as is.

Select and open the "[Edit] system message" block in your cloned structure. Then, change the business info and FAQs and tailor the text to your own business (you can leave the other instructive text in the content as is).

This sets GPT to respond to queries related to your business and reject generic queries (such as "What's the weather today").

At this point, you should test your chatbot by asking it queries that are covered by the GPT System message you edited. It is also a good idea to test your bot with queries that are not covered by your System message, to see how GPT responds.
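For readers curious about what such a setup amounts to at the API level, the sketch below builds a request to OpenAI's Chat Completions endpoint with a system message followed by the user's query. It is not a copy of the Proto bot blocks: the `SYSTEM_MESSAGE` text, the "ExampleCo" business, and the function names are made up for illustration.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

# Hypothetical system message: instruction text plus business info.
SYSTEM_MESSAGE = (
    "You are a customer support assistant for ExampleCo. "
    "Only answer questions about ExampleCo using the information below. "
    "If a question is unrelated, politely decline.\n\n"
    "Business info: ExampleCo sells bicycles and is open 9am to 5pm."
)


def build_request(api_key, user_query, model="gpt-3.5-turbo"):
    """Build (but do not send) a Chat Completions request."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_MESSAGE},
            {"role": "user", "content": user_query},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send(build_request(key, "What's the weather today?"))` with a valid key is one way to check, outside Proto, whether the instruction text leads GPT to decline generic queries.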

Tips and best practices

It is important to note that the current setup utilises the GPT-3.5 Turbo model from OpenAI. This model supports multi-turn conversations, enabling GPT to respond to a user's query in the context of their previous messages.

E.g. User query: "What does Proto do?"

Bot response (via GPT): "Proto is an AI customer experience provider that..."

User query: "And who owns it?"

Bot response (via GPT): "Proto was founded by..."

This way, the chatbot (via GPT) is able to know that "it" here refers to Proto, as per the user's prior message.
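In chat-style APIs, this kind of multi-turn behaviour typically comes from sending the earlier messages along with the new query. The sketch below shows one way to assemble such a message list; the helper names and the short system message are assumptions for illustration, not the contents of the Proto bot blocks.

```python
def add_turn(history, role, content):
    """Return a new history list with one more message appended."""
    return history + [{"role": role, "content": content}]


def build_messages(system_message, history, user_query):
    """Assemble the message list: system message, prior turns, new query."""
    return (
        [{"role": "system", "content": system_message}]
        + history
        + [{"role": "user", "content": user_query}]
    )


history = []
history = add_turn(history, "user", "What does Proto do?")
history = add_turn(
    history, "assistant", "Proto is an AI customer experience provider that..."
)

messages = build_messages(
    "Answer only questions about Proto.", history, "And who owns it?"
)
```

Because the earlier question and answer travel with the new query, GPT has what it needs to resolve "it" to Proto. Keep in mind that these earlier turns also count towards the model's token limit.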

For best GPT performance, add as much of your business information as possible to the System message, following the set text structure.

It is important to be aware that the GPT-3.5 Turbo model has a limit of 4,096 tokens (OpenAI measures interactions in tokens - you can learn more HERE). This limit applies to the combined total of your input (i.e. your set System message and the user's query) and GPT's response (i.e. GPT's answer to the user's query).

Hence, it is advisable to keep your System message under 3,700 tokens at most. This leaves room for 396 tokens (i.e. 4,096 minus 3,700) for the user's query and GPT's response.

- To calculate your System message tokens, simply copy your whole System message and paste it into the OpenAI token calculator tool HERE
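The budget arithmetic above can be sketched in a few lines. Note that `estimate_tokens` uses a rough rule of thumb of about 4 characters per token for English text, which is an approximation assumed here for illustration; for an accurate count, use the token calculator tool. The function names are hypothetical.

```python
CONTEXT_LIMIT = 4096  # GPT-3.5 Turbo token limit quoted in this doc


def estimate_tokens(text):
    """Very rough estimate: about 4 characters per token for English text."""
    return -(-len(text) // 4)  # ceiling division


def remaining_budget(system_tokens, limit=CONTEXT_LIMIT):
    """Tokens left for the user's query plus GPT's response."""
    if system_tokens > limit:
        raise ValueError("System message alone exceeds the token limit.")
    return limit - system_tokens
```

For example, `remaining_budget(3700)` returns 396, matching the figure above. A shorter System message leaves more room for longer user queries and answers.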
